Online Convolutional Dictionary Learning for Multimodal Imaging

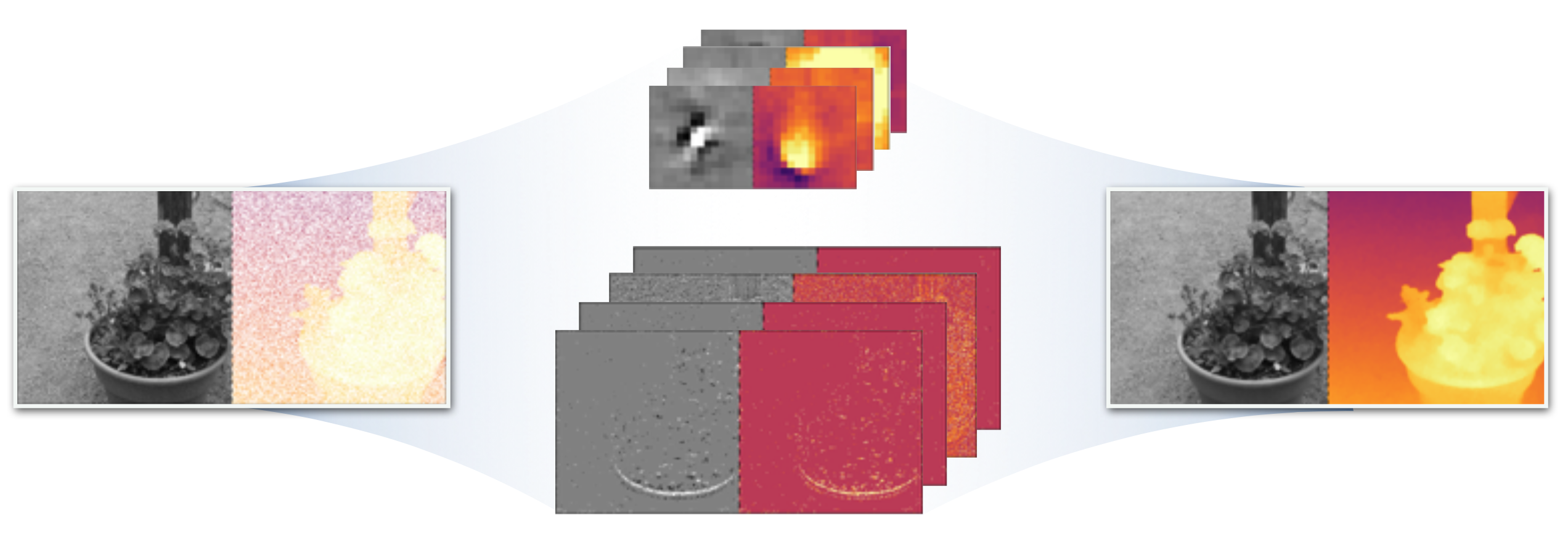

Computational imaging methods that can exploit multiple modalities have the potential to enhance the capabilities of traditional sensing systems. In this work, we propose a new method that reconstructs multimodal images from their linear measurements by exploiting redundancies across different modalities. Our method combines a convolutional group-sparse representation of images with total variation (TV) regularization for high-quality multimodal imaging. We develop an online algorithm that enables the unsupervised learning of convolutional dictionaries on the large-scale datasets that are typical in such applications.

Oct 23, 2017
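A group-sparse representation is commonly enforced with a group soft-thresholding step, which shrinks the coefficients of all modalities at a given location together so that they share the same support. The summary above does not say how the method implements this, so the following is only a minimal sketch of that generic operator, assuming the coefficients are stacked along a leading modality axis. The function name `group_soft_threshold` and its parameters are hypothetical and chosen for illustration.

```python
import numpy as np


def group_soft_threshold(coeffs, threshold):
    """Shrink coefficient groups jointly across modalities.

    Assumption: `coeffs` has shape (num_modalities, ...), and each group
    consists of the entries that share the same trailing index.
    This is the proximal operator of `threshold` times the sum of the
    l2 norms of those groups.
    """
    # l2 norm of each group, taken across the modality axis.
    norms = np.sqrt(np.sum(coeffs ** 2, axis=0, keepdims=True))
    # Scale factor max(1 - threshold / norm, 0); the small floor avoids
    # dividing by zero for groups that are already all zero.
    scale = np.maximum(1.0 - threshold / np.maximum(norms, 1e-12), 0.0)
    return coeffs * scale


# Two modalities, two coefficient locations.
example = np.array([[3.0, 0.1],
                    [4.0, 0.1]])
shrunk = group_soft_threshold(example, threshold=1.0)
# The first location has group norm 5, so both modalities are scaled by 0.8.
# The second location has group norm below the threshold, so it is set to zero.
```

Because the scale factor depends only on the joint norm, a location is either kept in every modality or discarded in every modality, which is how redundancy across modalities can be exploited.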

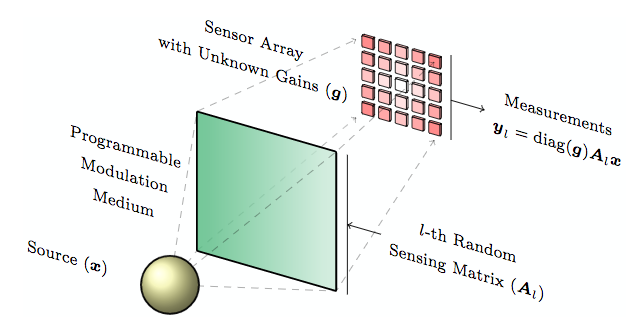

A research effort on solving blind calibration and deconvolution problems arising in compressive imaging.

Sep 19, 2017