Target tracking for the automatic control of Pan Tilt Zoom cameras

Capturing close-up video sequences of an object of interest moving through a large field of view often requires covering that field of view with tens of cameras. This is especially the case in surveillance and sports coverage. A Pan-Tilt-Zoom (PTZ) camera can zoom in on an object and follow it along its path with a single camera, but high-quality automatic tracking requires sufficiently reliable feedback about the target position and trajectory from the image processing module.

A new framework for target motion inference using point matching

Tracking can be defined as recursively matching an object of interest between two successive frames of a video sequence. We propose an original framework for solving this matching problem using a set of points to describe the target. Modeling an object with points makes it possible to handle zoom changes, which is difficult with a global object appearance model. In our formulation, the target displacement inference error is minimized with respect to the choice of points to match and their associated matching metrics, i.e. the (descriptor, matching distance) pair used for each point. This differs from classical point matching approaches in three major ways:

- No exact point-to-point correspondence is sought between two frames. A point from the first frame is compared with the candidate locations inside a search window in the second frame, which assigns a similarity measure to each displacement hypothesis. The resulting “probability maps” of several points are combined to infer the global target displacement.

- Different matching metrics can be used for different points. In this work, matching metrics are adapted to the point neighborhood content, so as to best discriminate it from its neighbors.

- Points are not chosen according to an intuitive, a priori constraint on content type (corners, edges, etc.). Instead, points are selected with respect to the global target motion inference objective, which results in a set of complementary points. The points are complementary in that they contribute to the object displacement inference by rejecting different displacement hypotheses.
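
The idea of combining per-point similarity maps can be illustrated with a minimal Python sketch. It is not the method of the related paper: the function names, the raw-patch descriptor, the mean-squared-difference distance, the `exp(-d / scale)` similarity and the product rule used to combine maps are all assumptions made for illustration. The same metric is also used for every point here, whereas the framework adapts it per point.

```python
import numpy as np

def similarity_map(prev, curr, point, patch=2, search=4, scale=0.01):
    """Score every displacement (dy, dx) in the search window for one point.

    Assumed metric: mean squared difference between raw pixel patches,
    mapped to a similarity with exp(-d / scale) and normalized to sum to 1.
    The point must lie at least patch + search pixels from the image border.
    """
    y, x = point
    ref = prev[y - patch:y + patch + 1, x - patch:x + patch + 1].astype(float)
    size = 2 * search + 1
    scores = np.zeros((size, size))
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            cy, cx = y + dy, x + dx
            cand = curr[cy - patch:cy + patch + 1,
                        cx - patch:cx + patch + 1].astype(float)
            dist = np.mean((ref - cand) ** 2)
            scores[dy + search, dx + search] = np.exp(-dist / scale)
    return scores / scores.sum()

def infer_displacement(prev, curr, points, patch=2, search=4):
    """Combine the maps of all points and return the best global (dy, dx).

    Maps are multiplied (summed in the log domain), so a displacement
    hypothesis rejected by any single point gets a low combined score.
    """
    size = 2 * search + 1
    log_sum = np.zeros((size, size))
    for p in points:
        log_sum += np.log(similarity_map(prev, curr, p, patch, search) + 1e-12)
    iy, ix = np.unravel_index(np.argmax(log_sum), log_sum.shape)
    return int(iy) - search, int(ix) - search

# Synthetic check: a random texture shifted by 2 rows and -3 columns.
rng = np.random.default_rng(0)
frame_a = rng.random((64, 64))
frame_b = np.roll(frame_a, shift=(2, -3), axis=(0, 1))
target_points = [(20, 20), (30, 40), (45, 25)]
estimated = infer_displacement(frame_a, frame_b, target_points)
```

No individual point is matched to a single location in this sketch: only the combined map is maximized, which is what allows points with ambiguous individual maps to complement each other.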

Related paper: Quentin De Neyer, Christophe De Vleeschouwer, A resource allocation framework for adaptive selection of point matching strategies, ACIVS 2013, Poznan, Poland (dial)

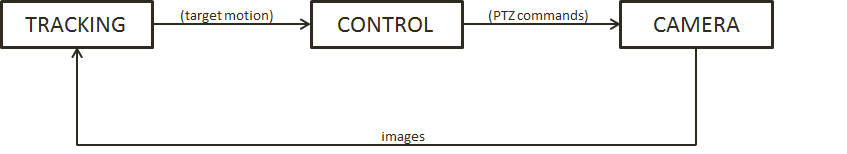

Integrated system for the control of PTZ cameras

A simplified closed-loop control system involving the motorized camera and the image processing (point-based tracking) module is depicted in the figure below. The feedback is given by the detected position of the target, the setpoint being the center of the camera field of view. We use a PI controller and test the system with an original method: pre-recorded videos are projected on a screen, and the system tracks the projected object of interest. This makes the tests reproducible and well controlled.
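
As a rough illustration of such a loop, the sketch below implements a discrete PI controller and runs it against a toy camera model. The class and function names, the gains, the time step and the plant model (a pan axis that integrates a speed command) are assumptions chosen for the example, not the parameters of the demonstrated system.

```python
from dataclasses import dataclass

@dataclass
class PIController:
    """Discrete PI controller: command = kp * error + ki * integral(error)."""
    kp: float
    ki: float
    integral: float = 0.0

    def update(self, error: float, dt: float) -> float:
        self.integral += error * dt
        return self.kp * error + self.ki * self.integral

def simulate_pan(target_deg: float, steps: int, dt: float,
                 kp: float, ki: float) -> float:
    """Track a static target with a toy pan axis and return the final error.

    Assumed plant: the controller output is a pan speed in degrees per
    second, and the camera angle is the integral of that speed.
    """
    controller = PIController(kp=kp, ki=ki)
    camera_deg = 0.0
    for _ in range(steps):
        # Error: angular offset of the target from the image center (setpoint).
        error = target_deg - camera_deg
        speed = controller.update(error, dt)
        camera_deg += speed * dt
    return target_deg - camera_deg

# 20 seconds at 25 frames per second, target initially 10 degrees off-center.
final_error = simulate_pan(target_deg=10.0, steps=500, dt=0.04, kp=4.0, ki=2.0)
```

In this toy model, raising `kp` makes the camera react faster, while the balance between `kp` and `ki` determines how much the camera overshoots before settling.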

The principal tasks in setting up a PTZ control system are:

- The choice of the P and I parameters of the controller to find the right compromise between reactivity of the system and smoothness of the camera motion.

- The calibration of the camera (translation of pixel displacements into angular displacements, as a function of the zoom level).
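
The pixel-to-angle translation mentioned in the calibration task can be sketched as follows under an ideal pinhole model. The function name and parameters are hypothetical, and the code assumes that the focal length scales linearly with the zoom factor. A real PTZ camera generally needs a measured calibration per zoom level instead.

```python
import math

def pixel_to_angle_deg(offset_px: float, image_width_px: int,
                       hfov_wide_deg: float, zoom: float) -> float:
    """Convert a horizontal pixel offset from the image center to a pan angle.

    Assumptions: pinhole camera, no lens distortion, and a focal length
    equal to `zoom` times the focal length at the widest setting, where the
    horizontal field of view is `hfov_wide_deg`.
    """
    half_width = image_width_px / 2
    # Focal length in pixels at the widest setting, scaled by the zoom factor.
    focal_px = zoom * half_width / math.tan(math.radians(hfov_wide_deg) / 2)
    return math.degrees(math.atan(offset_px / focal_px))

# A target at the right image border, widest zoom, 60 degree field of view.
edge_angle = pixel_to_angle_deg(960, 1920, hfov_wide_deg=60.0, zoom=1.0)
# The same pixel offset at 4x zoom corresponds to a smaller pan angle.
zoomed_angle = pixel_to_angle_deg(960, 1920, hfov_wide_deg=60.0, zoom=4.0)
```

The same pixel error therefore maps to a smaller angular correction at high zoom, which is why the conversion has to depend on the zoom level.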

Our system was demonstrated during the International Conference on Distributed Smart Cameras (ICDSC) in 2012.

Related paper: De Neyer, Q.; Sun, L.; Chaudy, C.; Parisot, C.; De Vleeschouwer, C., “Demo: Point matching for PTZ camera autotracking,” 2012 Sixth International Conference on Distributed Smart Cameras (ICDSC), pp. 1-2, Oct. 30 - Nov. 2, 2012 (dial)