Compressive Statistical Learning

Context

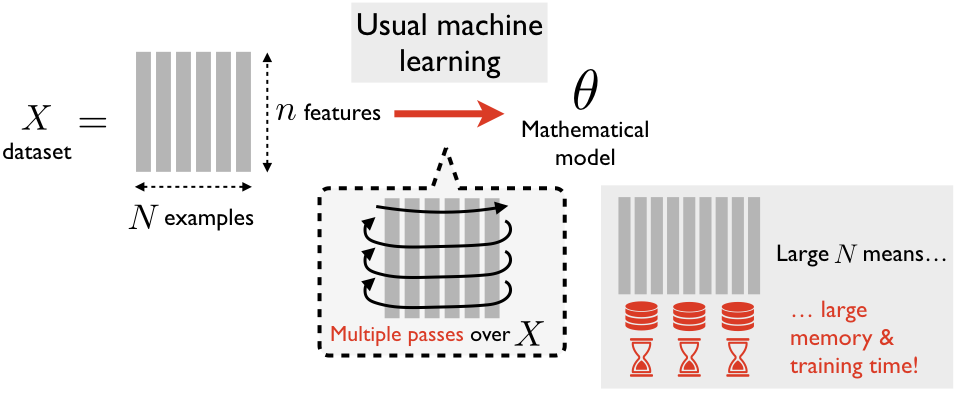

Machine learning is the science (or art, depending on who you ask) of automatically discovering a mathematical model $\theta$ (such as a prediction rule) from a large set of learning examples $X$, instead of hand-crafting $\theta$ from human expertise (see Figure 1). In recent years, machine learning earned its place in the spotlight by achieving impressive success, beating traditional solutions by a large margin on a wide variety of challenging tasks (e.g., in computer vision, general inverse problems, artificial intelligence…).

This success can be explained by two main factors. The obvious one is the improvement of machine learning techniques thanks to decades-long research by a growing community. The second one, more easily taken for granted, is the ever-increasing abundance of data to learn from, a trend commonly called “Big Data” or “Data Deluge”. This second slightly-more-threatening nickname is closer to the truth: while the enormous size of modern datasets allows to train more sophisticated models, it also poses several—often overlooked—difficult problems.

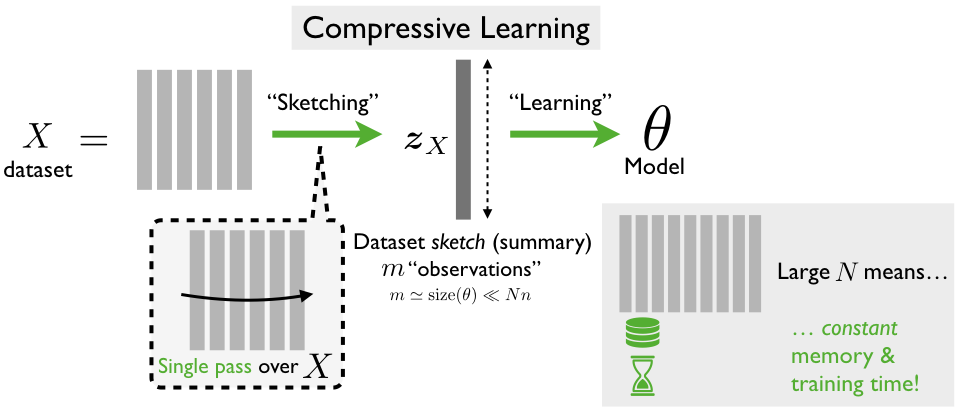

Amongst others, we focus on the explosion of the computational resources needed to handle such datasets. Because traditional machine learning algorithms need access to the data multiple times during training, large memory storage is required to store all this data, and reading it repeatedly takes a lot of time. One attempt to circumvent this issue is compressive statistical learning (also known as sketched learning), a framework introduced by Rémi Gribonval, Nicolas Keriven and co-authors [Entry not found - gribonval2021compressive] [Entry not found - keriven2018sketching]. The idea is to break down the expensive learning task from the “raw” dataset into two cheaper steps: sketching and then the actual “compressive learning" (see Figure 2).

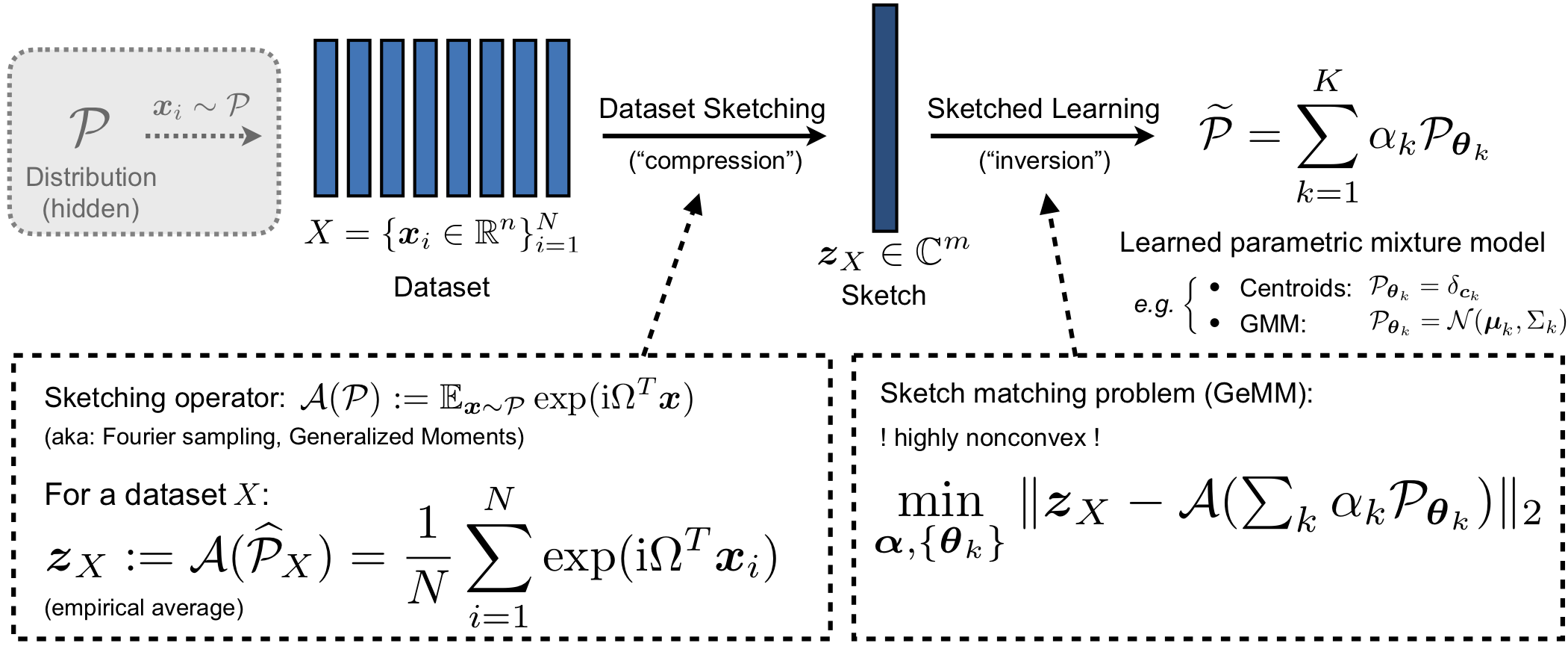

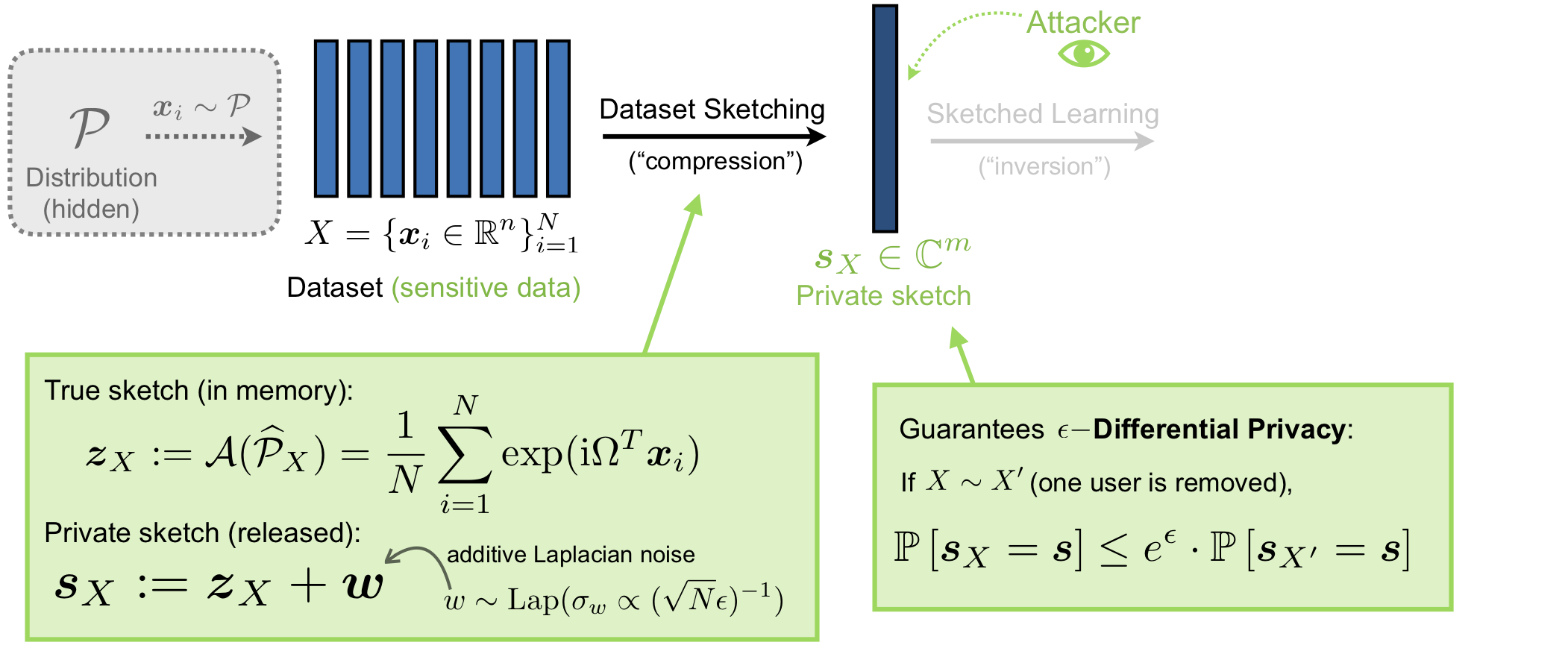

Sketching means compressing the dataset (i.e., as a whole instead of compressing each learning sample independently as is often done). This is a change in perspective: instead of seeing the dataset $X$ as a collection of distinct learning examples, we see it as an (empirical) probability distribution $\widehat{\mathcal{P}}_X$. The goal is then to compress this probability distribution (which is the true “signal” of interest here) while preserving some aspects of its geometry. Inspired by compressed sensing, we can do this by computing a set of (randomly generated) generalized moments of the data (i.e., linear projections of the signal of interest!). More intuitively, the sketch is the empirical average of some features of the dataset; this process can be thought of as constructing a generalized “histogram" of the dataset (see Figure 3, left). The resulting “measurement vector” $\boldsymbol{z}_X$, called the sketch, acts as a summary for the whole dataset. By construction its size does not grow with the number of examples $N$ in the dataset. Moreover, the sketch is light to compute (can be done in parallel, on-line, on decentralized machines,…) and allows to delete the data after only looking at it only once: sketching thus scales very well with modern massive datasets.

Compressive learning now amounts to extracting the target mathematical model $\theta$, from the lightweight sketch $\boldsymbol{z}_X$ only (requiring potentially far less computational resources than usual learning). Following the parallel with compressive sensing, this step involves solving an inverse problem, where we try to reconstruct an approximation to the probability distribution $\mathcal{P}$ that supposedly generated the dataset, according to some “prior” determined by the actual machine learning task we try to solve. For example, for Gaussian mixture modelling, we assume that the observed probability distribution (the dataset) is well-approximated by a mixture of a few Gaussian distributions, and seek the Gaussian mixture that fits the best the sketch (the observations). Another example is k-means clustering, where we assume that the empirical density associated with the dataset is well-approximated by a mixture of a few Dirac distributions (see Figure 3, right).

Our project(s)

In this research project, we work on several distinct—yet connected—aspects of compressive learning.

Cheaper sketching

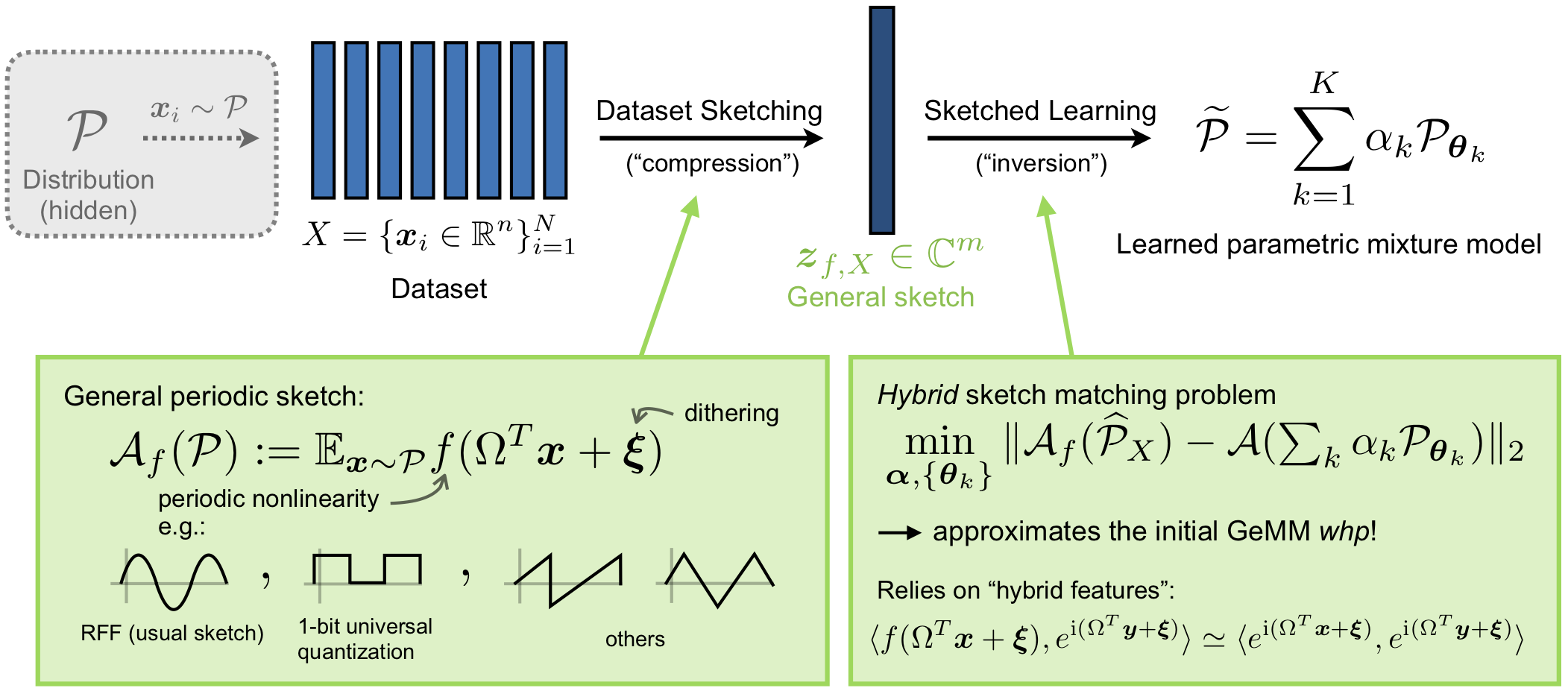

Computing the sketch of a dataset can be done in a single pass, but still requires to handle all the high-resolution samples in software, which can take a significant amount of time. The bottleneck lies in a nonlinear map (usually the complex exponential as represented in Figure 3, left). To speed up this process, we study the replacement of this costly operation by any map $f$. We have as target a much cheaper binary operation, the one-bit universal quantization (i.e., the least significant bit of a uniform quantizer) which would allow to compute the sketch directly in hardware without having to acquire the high-resolution signals; see Figure 4. We showed that we can learn from the quantized sketch “as if nothing changed” with a formal guarantee that the resulting error will be relatively small .

Related References: [Entry not found - boreal:202890][Entry not found - boreal:265613]

Privacy-aware sketching

During sketching, the contributions of all samples are “averaged out”, and one individual record of the dataset becomes indistinguishable, drown into the other contributions (especially when the dataset cardinality $N$ becomes large). This makes sketching appealing for privacy-protecting learning; however, a formal proof of a well-defined privacy criterion is needed beyond this intuition, which is what we work on in this project.

We determined Differential Privacy (Figure 5, right) as the most relevant formal privacy criterion (intuitively, this means that we cannot distinguish from the sketch outcome whether one particular user is present in the dataset). We then proposed a differentially private sketching mechanism (Figure 5, left): the usual sketch is computed by a trusted device, that then adds a Laplacian noise before releasing the noisy sketch publicly. On several use cases (k-means clustering and Gaussian mixture modelling), this approach competes favorably with usual state-of-the-art (specialized) privacy-preserving mechanisms.

Related References: [Entry not found - boreal:216655]

Collaborators

- Antoine Chatalic (Irisa, Rennes, France)

- Rémi Gribonval (ENS, Lyon, France)

- Florimond Houssiau (Imperial College, London, UK)

- Yves-Alexandre de Montjoye (Imperial College, London, UK)

Getting more out of the sketch

Existing compressive learning research focused mainly on density estimation problems where the density can be easily estimated in closed form. However, many machine learning tasks cannot easily be translated into this formalism. We are thus trying to extend compressive learning to a broader class of tasks, such as generative network learning, nonnegative matrix factorisation, and supervised tasks such as classification. We propose a Monte-Carlo approximation to replace the closed-form density expressions in the first two instances, and to perform classification directly in the compressed domain in the latter.

Related References: [Entry not found - boreal:209861] [Entry not found - boreal:236933]