Body Part-based Representation Learning for Occluded Person Re-Identification

Introduction

Person re-identification, or ReID, is a retrieval task that aims to match an image of a person of interest (the “query”) with other person images from a large database (the “gallery”).

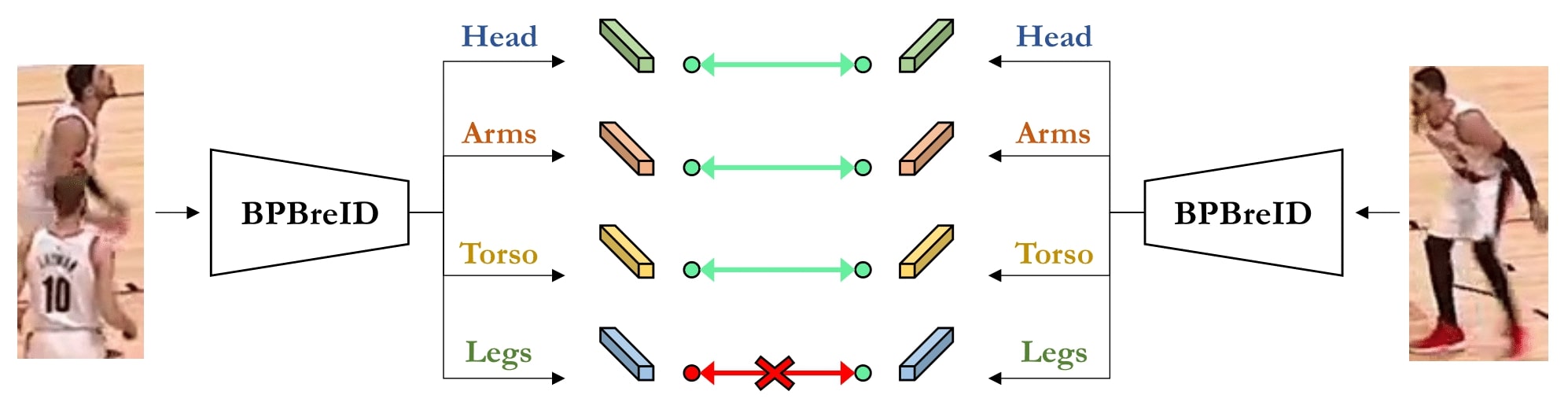

In this work, we propose BPBreID, a part-based method for occluded person re-identification.

We make two contributions:

- An attention module to extract one feature vector for each body part.

- The GiLt loss, a novel loss to train any part-based method.

Our code, poster, and video are available on GitHub, and the paper is on arXiv.

Motivations

Training part-based methods is challenging for two main reasons.

Our two contributions address these challenges.

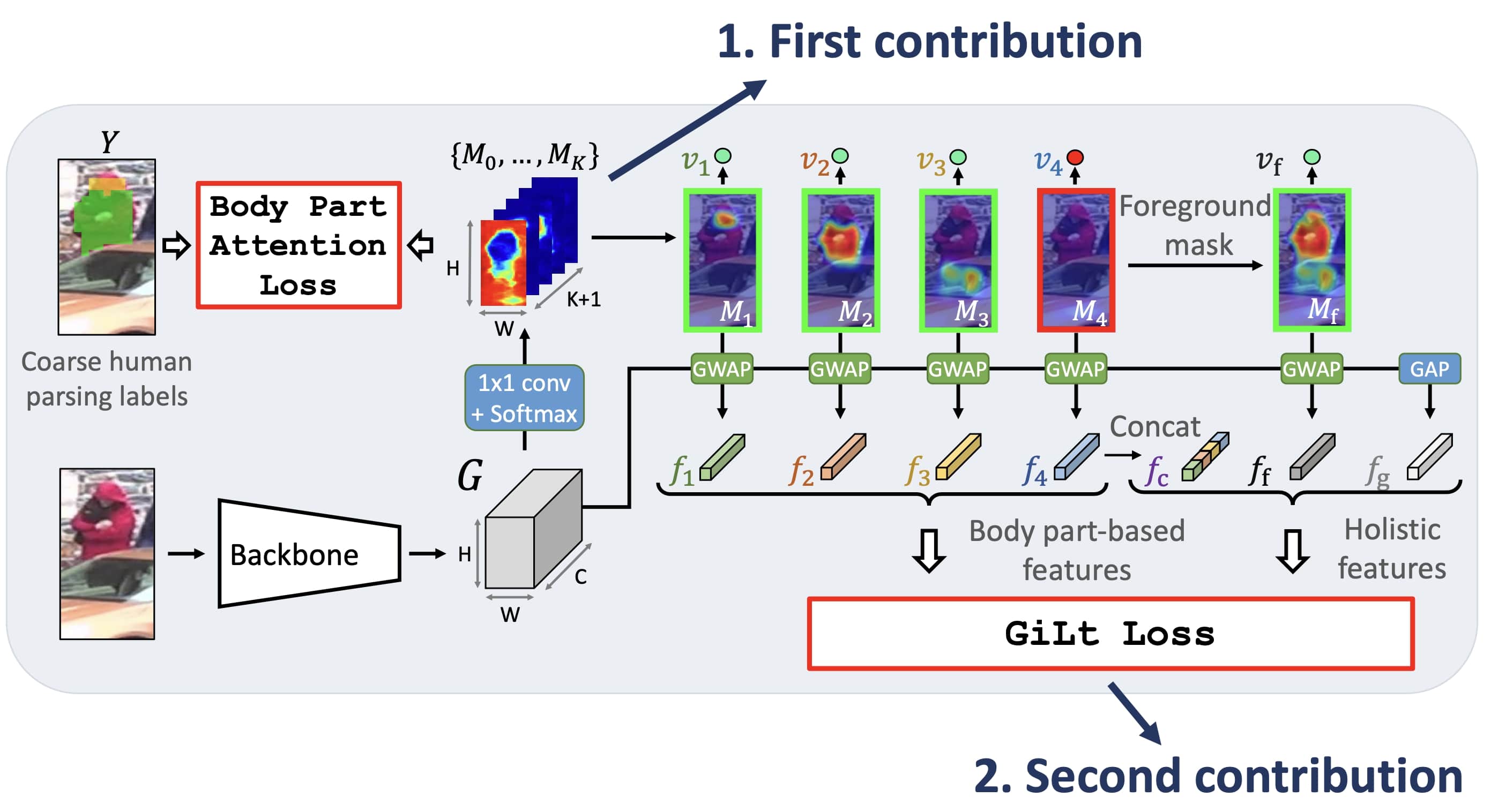

Our proposed method: BPBreID

Overview of the architecture of our model.

BPBreID outputs K body part-based embeddings and three holistic embeddings.

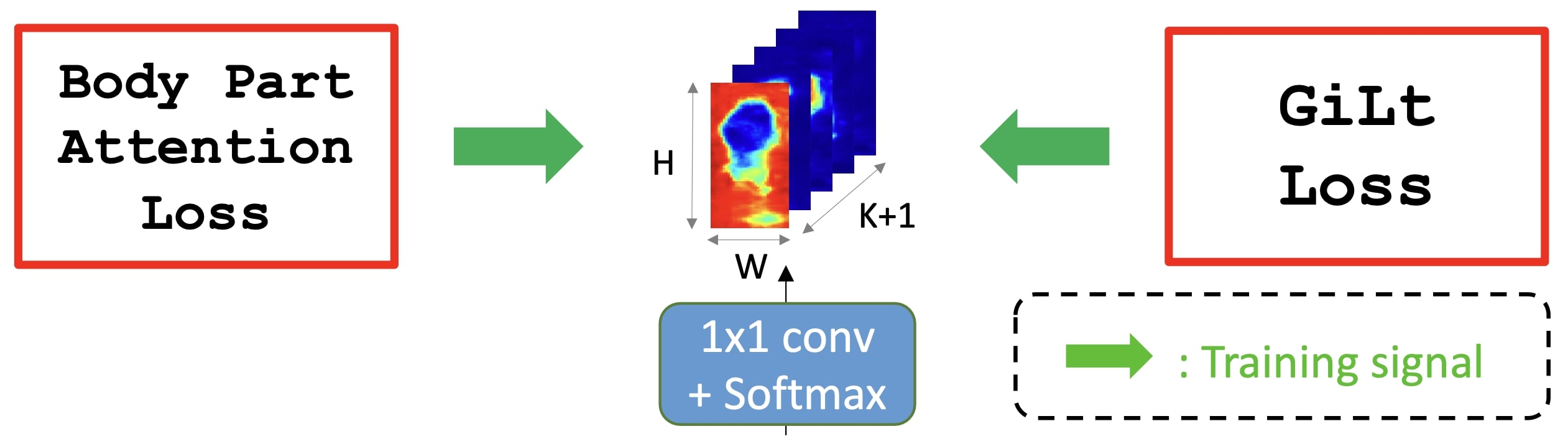

First contribution: The body parts attention module

The attention module receives two training signals: one from the ReID objective and one from the part prediction objective.

As a result, the learned attention is more accurate and specialized for ReID.
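To make the idea of "one feature vector for each body part" concrete, here is a minimal, dependency-free sketch of attention-weighted pooling. It is not our actual implementation: the per-pixel softmax, the extra background class at index 0, the `eps` term, and the function names `softmax` and `part_embeddings` are all assumptions made for illustration. In such a setup, the part prediction objective would supervise the per-pixel logits, while the ReID objective would act on the pooled embeddings.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def part_embeddings(features, part_logits, eps=1e-6):
    """Pool one embedding per body part from per-pixel features.

    features:    list of N pixel features, each a list of C floats.
    part_logits: list of N per-pixel logits, each a list of K + 1 floats
                 (index 0 is an assumed background class).
    Returns a list of K embeddings, each a list of C floats.
    """
    attention = [softmax(row) for row in part_logits]  # N x (K + 1)
    num_parts = len(part_logits[0]) - 1
    dim = len(features[0])
    embeddings = []
    for k in range(1, num_parts + 1):  # skip the background class
        weights = [a[k] for a in attention]
        norm = sum(weights) + eps
        # Attention-weighted average of the pixel features for part k.
        emb = [sum(w * f[c] for w, f in zip(weights, features)) / norm
               for c in range(dim)]
        embeddings.append(emb)
    return embeddings

# Toy input: 2 pixels, 2 feature channels, K = 2 parts (+ background).
features = [[1.0, 0.0], [0.0, 1.0]]
part_logits = [[0.0, 10.0, 0.0], [0.0, 0.0, 10.0]]
parts = part_embeddings(features, part_logits)
```

In this toy input, pixel 0 is confidently assigned to part 1 and pixel 1 to part 2, so each part embedding ends up close to the feature of "its" pixel.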

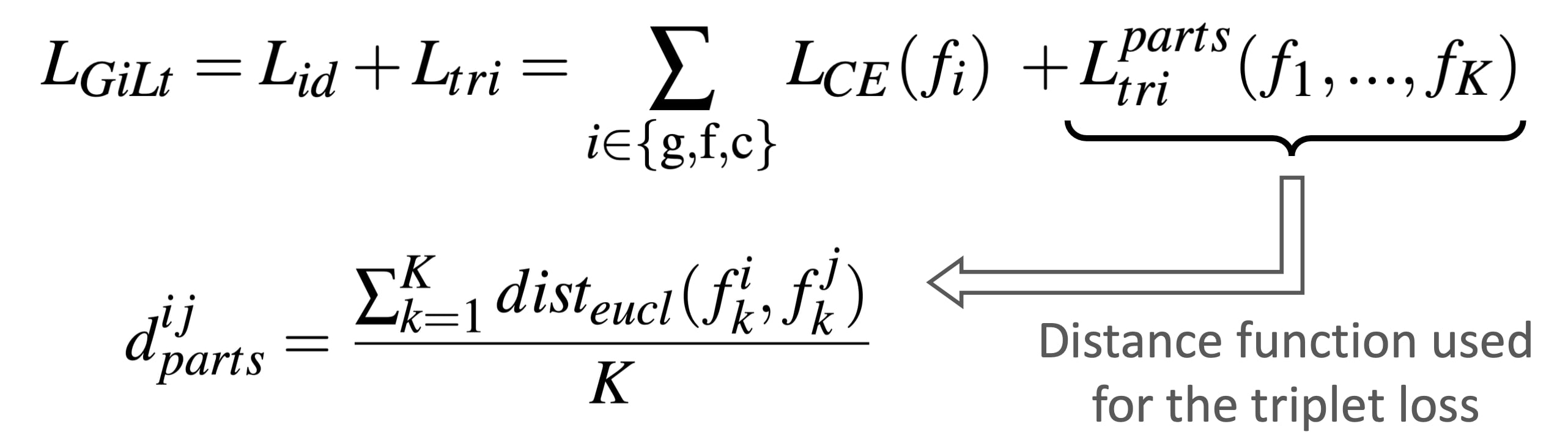

Second contribution: The GiLt loss

GiLt stands for Global-identity Local-triplet.

GiLt is robust to occlusions and non-discriminative parts because it always has access to global information for solving the ReID objective.
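The name suggests the general shape of the loss: an identity (classification) term on global information and a triplet term on local, part-based embeddings. The sketch below illustrates that combination on a single triplet. It is a simplified illustration under stated assumptions, not the exact GiLt formulation: the averaging of distances over parts, the margin of 0.3, the two weights, the use of a single set of global logits, and all function names are hypothetical choices.

```python
import math

def cross_entropy(logits, label):
    """Identity loss: negative log-softmax of the true identity."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum - logits[label]

def euclidean(a, b):
    """Euclidean distance between two vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def part_distance(parts_a, parts_b):
    """Average Euclidean distance over corresponding body parts (assumed)."""
    dists = [euclidean(pa, pb) for pa, pb in zip(parts_a, parts_b)]
    return sum(dists) / len(dists)

def triplet_loss(anchor, positive, negative, margin=0.3):
    """Hinge-style triplet loss on part-based embeddings."""
    d_pos = part_distance(anchor, positive)
    d_neg = part_distance(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)

def gilt_like_loss(global_logits, label, anchor, positive, negative,
                   id_weight=1.0, triplet_weight=1.0):
    """Identity loss on the global branch plus triplet loss on local parts."""
    return (id_weight * cross_entropy(global_logits, label)
            + triplet_weight * triplet_loss(anchor, positive, negative))

# Toy example: 2 identities, 2 body parts, 2-dimensional part embeddings.
anchor = [[0.0, 0.0], [1.0, 0.0]]
positive = [[0.0, 0.0], [1.0, 0.0]]
negative = [[3.0, 4.0], [1.0, 0.0]]
loss = gilt_like_loss([0.0, 0.0], 0, anchor, positive, negative)
```

Because the identity term only sees global information in this sketch, an occluded or uninformative part cannot by itself drive the classification signal; it only affects the part-averaged distance in the triplet term.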

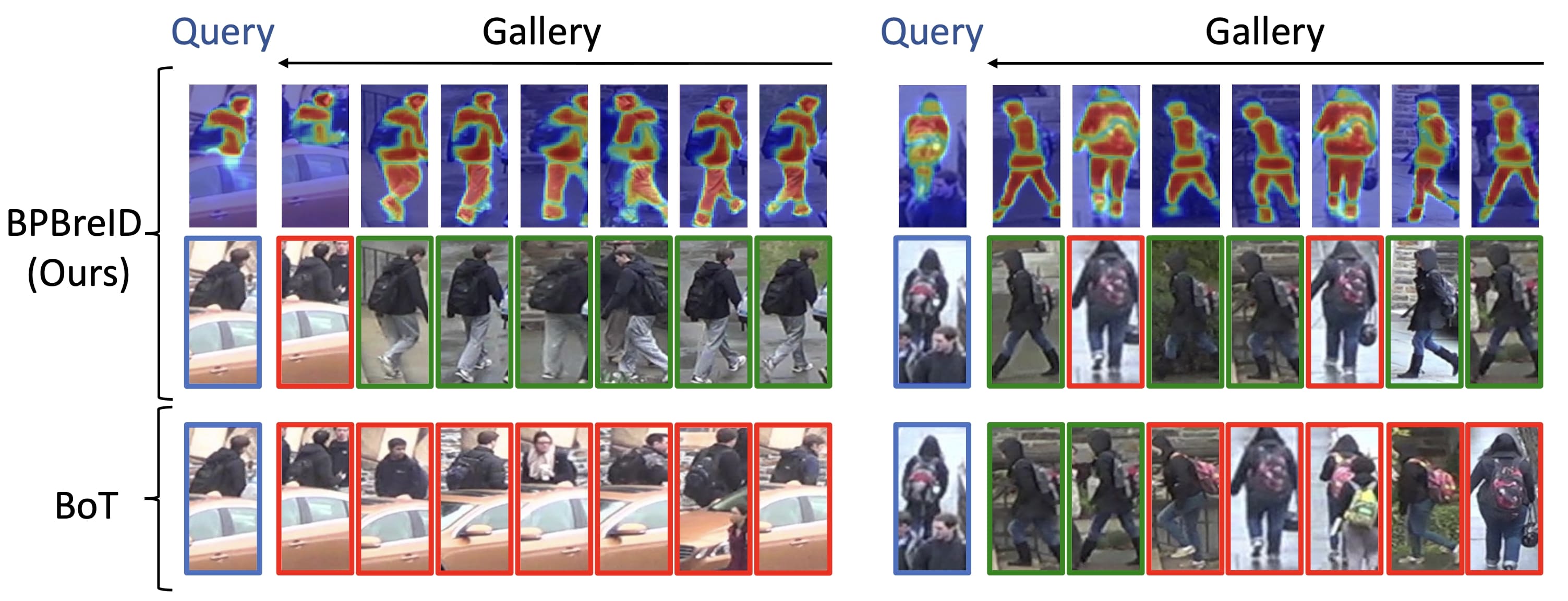

Visualizations

Here are some rankings compared with those of other methods: gallery samples are ranked according to their similarity to the query, with the top retrieved sample on the left.

As illustrated here, BPBreID is less sensitive to occlusions than previous methods.
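The ranking step behind these visualizations can be sketched in a few lines. This is a generic illustration and not our evaluation code: it assumes one embedding vector per image and cosine similarity as the similarity measure, and the function names `cosine_similarity` and `rank_gallery` are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_gallery(query, gallery):
    """Return gallery indices sorted from most to least similar to the query."""
    scores = [cosine_similarity(query, g) for g in gallery]
    return sorted(range(len(gallery)), key=lambda i: scores[i], reverse=True)

# Toy example: one query embedding and three gallery embeddings.
query = [1.0, 0.0]
gallery = [[0.0, 1.0], [1.0, 0.1], [-1.0, 0.0]]
ranking = rank_gallery(query, gallery)  # [1, 0, 2]
```

The first index in `ranking` corresponds to the top retrieved sample, that is, the leftmost image in a ranking visualization.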