Deep Learning for Anomaly Detection in Industrial Vision

Anomaly detection is the process of identifying rare items, events, or observations that differ significantly from the majority of the data [chandola2009anomaly]. Such methods apply to many domains: detection of bank fraud (finance), pathological tissue [schlegl2017unsupervised] (biomedicine), manufacturing defects [staar2019anomaly] (industry), etc.

Why Opt for an Unsupervised Approach?

At first sight, anomaly detection looks like a problem for a classification-based approach. Unfortunately, training such supervised models requires collecting a significant amount of labeled data, which is a massive undertaking. Moreover, the very nature of the anomaly detection problem makes the supervised approach inappropriate for two reasons:

- The scarcity of abnormal events makes the normal and abnormal classes highly imbalanced

- The appearance of abnormal samples can be highly variable

Therefore, unsupervised approaches, which rely solely on the statistics of the observed data, are the most appropriate way to address anomaly detection in real-world applications.

Reconstruction-based Approach

Anomaly detection in images can be tackled by a reconstruction-based approach. In this strategy, a CNN, usually an autoencoder, is used to replace abnormal regions of an image with the normal structures seen during training. A residual map, obtained by measuring the difference between the input image and its reconstruction, can then be interpreted as the likelihood that a region does not belong to the normal class. For this to work, all normal regions should remain unaltered, while abnormal ones should be replaced by clean content.
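To make the residual map concrete, here is a minimal NumPy sketch. It assumes a per-pixel squared error as the difference measure and the maximum residual as the image-level score; both are illustrative choices, and the function names and the threshold are hypothetical. The "reconstruction" is simply the clean image, standing in for the output of an ideal network.

```python
import numpy as np

def residual_map(image, reconstruction):
    """Per-pixel squared error, averaged over channels if present."""
    image = np.asarray(image, dtype=np.float64)
    reconstruction = np.asarray(reconstruction, dtype=np.float64)
    if image.shape != reconstruction.shape:
        raise ValueError("image and reconstruction must have the same shape")
    err = (image - reconstruction) ** 2
    # Collapse the channel axis (H, W, C) -> (H, W); grayscale is left as is.
    return err.mean(axis=-1) if err.ndim == 3 else err

def anomaly_score(res_map):
    """Image-level score: here simply the largest pixel residual (assumption)."""
    return float(res_map.max())

# Toy example: a flat gray image with a bright 2x2 defect.
clean = np.full((8, 8), 0.5)
defective = clean.copy()
defective[3:5, 3:5] = 1.0

# An ideal reconstruction replaces the defect with clean content,
# so we use the clean image as a stand-in for the network output.
res = residual_map(defective, clean)
mask = res > 0.1  # hypothetical threshold, to be tuned on validation data
```

In this toy case the residual is zero everywhere except on the four defective pixels, which is exactly the behavior the reconstruction network is expected to approximate.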

Our Method

The approach trains an autoencoder on defect-free images only. Traditionally, the dimensionality reduction at the bottleneck is expected to regularize, by itself, the reconstruction onto the space of clean images.

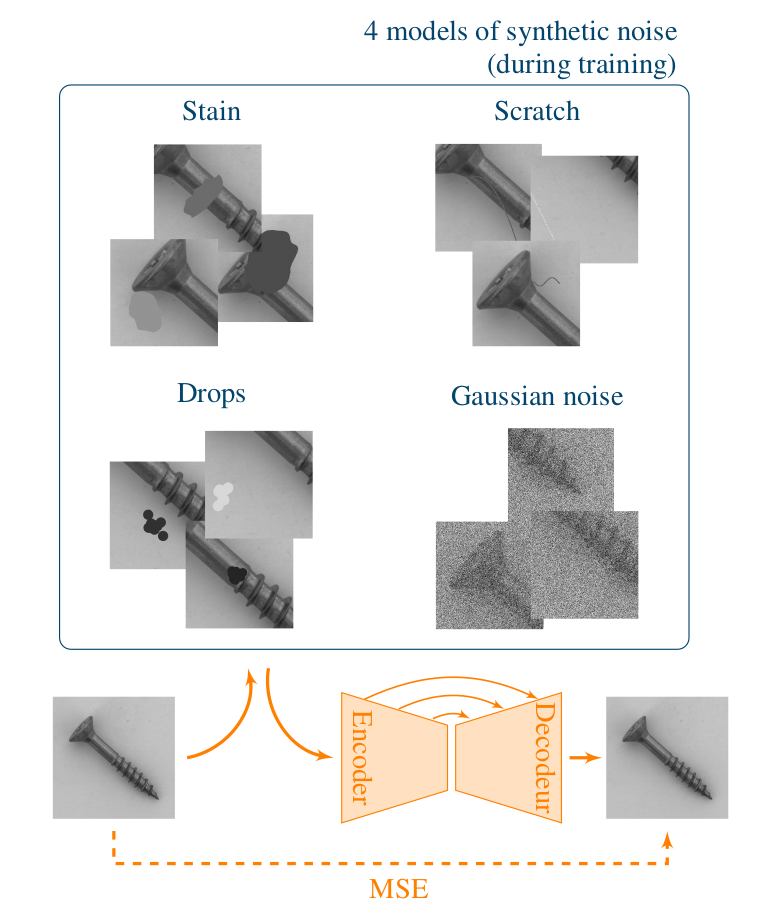
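The regularizing effect of the bottleneck can be illustrated with a deliberately simplified stand-in: a linear autoencoder, computed here as a PCA projection with NumPy, instead of a CNN. This is not the method described in this article, only a sketch of the principle. The data, dimensions, and names are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical "normal" data: 200 samples of dimension 16 lying close to
# a 2-D subspace, plus a small amount of noise.
basis = rng.normal(size=(2, 16))
normal = rng.normal(size=(200, 2)) @ basis + 0.01 * rng.normal(size=(200, 16))

# "Training": estimate the mean and the two principal directions
# of the defect-free data. The 2-D code is the bottleneck.
mean = normal.mean(axis=0)
_, _, vt = np.linalg.svd(normal - mean, full_matrices=False)
components = vt[:2]

def reconstruct(x):
    code = (x - mean) @ components.T   # encoder: project onto the bottleneck
    return code @ components + mean    # decoder: map back to input space

def error(x):
    """Squared reconstruction error of a single sample."""
    return float(np.sum((x - reconstruct(x)) ** 2))

normal_sample = rng.normal(size=2) @ basis
anomalous_sample = normal_sample.copy()
anomalous_sample[5] += 3.0             # a "defect" that leaves the normal subspace
```

Because the decoder can only produce points in the space spanned by the normal data, `error(anomalous_sample)` comes out much larger than `error(normal_sample)`: the defect is not reproduced in the reconstruction.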

However, to improve the reconstruction of clean images, or of the clean parts of an image, we add skip-connections to the autoencoder. Since skip-connections make it easy for the network to copy its input, we also corrupt the training images to prevent convergence toward an identity mapping. Several synthetic noise models are compared, as shown in Figure 1.

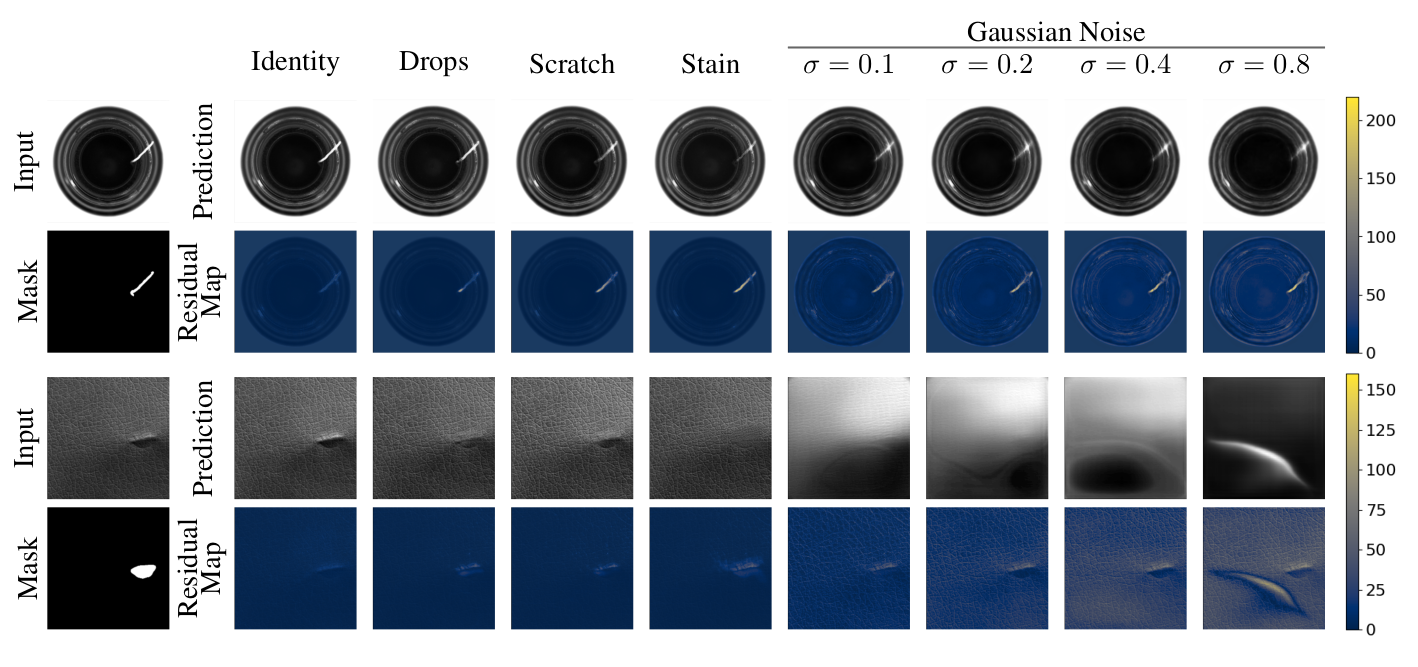
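As an illustration of what a content-independent corruption can look like, the sketch below paints a random elliptical stain of constant intensity on a grayscale image, next to a plain Gaussian noise model for comparison. The exact stain model used in this work is not specified here, so the ellipse shape, size range, and intensity sampling are assumptions, and the function names are hypothetical.

```python
import numpy as np

def add_stain(image, rng):
    """Paint one random elliptical stain of constant intensity onto a copy
    of a grayscale image with values in [0, 1]. Returns (corrupted, mask)."""
    h, w = image.shape
    cy, cx = rng.uniform(0, h), rng.uniform(0, w)   # stain center
    ry = rng.uniform(0.05, 0.2) * h                 # semi-axes (assumed range)
    rx = rng.uniform(0.05, 0.2) * w
    theta = rng.uniform(0, np.pi)                   # rotation angle
    yy, xx = np.mgrid[0:h, 0:w]
    dy, dx = yy - cy, xx - cx
    # Pixel coordinates expressed in the rotated frame of the ellipse.
    u = dx * np.cos(theta) + dy * np.sin(theta)
    v = -dx * np.sin(theta) + dy * np.cos(theta)
    mask = (u / rx) ** 2 + (v / ry) ** 2 <= 1.0
    corrupted = image.copy()
    # The stain value is drawn independently of the image content.
    corrupted[mask] = rng.uniform(0.0, 1.0)
    return corrupted, mask

def add_gaussian_noise(image, rng, sigma=0.1):
    """Unstructured alternative: i.i.d. Gaussian noise on every pixel."""
    noisy = image + rng.normal(0.0, sigma, size=image.shape)
    return np.clip(noisy, 0.0, 1.0)

rng = np.random.default_rng(0)
clean = np.full((64, 64), 0.5)
stained, stain_mask = add_stain(clean, rng)
noisy = add_gaussian_noise(clean, rng)
# A training pair is (stained, clean): corrupted input, clean target.
```

With such pairs, the network is rewarded for restoring the clean content under the stain while leaving the rest of the image untouched, so copying the input is no longer an optimal solution.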

Figure 2, an example from the MVTec AD dataset [bergmann2019mvtec], shows that a structured, stain-shaped noise that is independent of the image content, combined with skip-connections in the autoencoder, leads to better reconstruction and hence better anomaly detection.

On the object (top row), the stain-shaped corruption leads to the best reconstruction of the defective region, even though the defect looks like a scratch. This indicates that the synthetic noise generalizes well to real anomalies.

The skip-connections also improve the reconstruction of finely textured surfaces. However, an unstructured noise such as the Gaussian noise model performs poorly on textures.

Overall, the combination of skip-connections and a stain-shaped synthetic noise leads to the best reconstruction.